Rust, Lambda and CDK

Squeezing the most out of Lambda

Maxime David's lambda-perf provides an in-depth comparison of different programming languages' performance on AWS Lambda. One standout result is Rust's cold start time, which clocks in at around 15 milliseconds. This is a significant advantage, particularly in serverless architectures where cold start latency can impact the performance of your applications.

The low memory footprint and high throughput of Rust Lambdas make them a strong choice for cost-effective Lambda functions.

Why isn't everyone using Rust on Lambda?

Historically, writing, building and deploying Rust Lambdas hasn't been the easiest process, as the tooling around it lacked official support.

- Building needed to be done through Docker, which was painfully slow.

- The aws_lambda_events crate was transpiled from the Go library, and it wasn't uncommon to find unimplemented or out-of-date event types.

- Serverless plugins and CDK constructs struggled to attract enough users to keep them from going stale.

All in all it wasn't the most optimised or stable environment for development.

I recently revisited the ecosystem and I am shocked at just how much it has progressed:

- Dockerless building can be done using cargo lambda.

- AWS released an official SDK for Rust.

- There is a CDKv2 construct that is feature complete.

- The AWS Rust runtime is under constant development.

In this post I would like to go over the process I use for building serverless applications with Rust, Lambda and CDK.

Prerequisites

The following will be required for completing this guide.

Setup

Let's set up the project by:

- Creating a new directory for our project; we are naming our project rust_cdk_demo.

- Initializing our CDK project.

- Initializing our Rust project.

CDK Setup

mkdir rust_cdk_demo

cd rust_cdk_demo

cdk init app --language=typescript

Cargo Lambda Setup

For this example we will just go with the default answers to the questions it asks.

cargo lambda init

# > Is this function an HTTP function? No

# > AWS Event type that this function receives

Our directory should now look something like this:

rust_cdk_demo
├── Cargo.toml
├── README.md
├── bin
│   └── rust_cdk_demo.ts
├── cdk.json
├── lib
│   └── rust_cdk_demo-stack.ts
├── package-lock.json
├── package.json
├── src
│   └── main.rs
└── tsconfig.json

Rust Lambda Function

cargo lambda init has created a base template for us to work from.

Let's take a look at the main.rs that is generated for us.

use lambda_runtime::{run, service_fn, Error, LambdaEvent};
use serde::{Deserialize, Serialize};

#[derive(Deserialize)]
struct Request {
    command: String,
}

#[derive(Serialize)]
struct Response {
    req_id: String,
    msg: String,
}

async fn function_handler(event: LambdaEvent<Request>) -> Result<Response, Error> {
    let command = event.payload.command;

    let resp = Response {
        req_id: event.context.request_id,
        msg: format!("Command {}.", command),
    };

    Ok(resp)
}

#[tokio::main]
async fn main() -> Result<(), Error> {
    tracing_subscriber::fmt()
        .with_max_level(tracing::Level::INFO)
        .with_target(false)
        .without_time()
        .init();

    run(service_fn(function_handler)).await
}

Let's break this down so we can understand what is going on.

Main

#[tokio::main]
async fn main() -> Result<(), Error> {
    tracing_subscriber::fmt()
        .with_max_level(tracing::Level::INFO)
        .with_target(false)
        .without_time()
        .init();

    run(service_fn(function_handler)).await
}

This is the entry point of the binary that will run when an instance of our Lambda starts.

The lambda_runtime::run function manages all of the communication between Lambda and our binary, while function_handler is the entry point of our Lambda function and is called for each invocation.
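To make the division of labour between run and function_handler more concrete, here is a rough conceptual model of the loop, written against the standard library only. It is a sketch, not how lambda_runtime is actually implemented: the real crate is async and talks to the Lambda Runtime API over HTTP. The names FakeRuntimeApi, Invocation, run_loop and demo are made up for this illustration.

```rust
use std::collections::VecDeque;

/// Hypothetical stand-in for one invocation handed to us by Lambda.
struct Invocation {
    request_id: String,
    payload: String,
}

/// Hypothetical stand-in for the Runtime API: a queue of pending invocations
/// plus a record of every result we reported back.
struct FakeRuntimeApi {
    pending: VecDeque<Invocation>,
    posted: Vec<(String, Result<String, String>)>,
}

impl FakeRuntimeApi {
    /// Models "fetch the next invocation"; None means nothing is left.
    fn next_invocation(&mut self) -> Option<Invocation> {
        self.pending.pop_front()
    }

    /// Models "report the result for this request id".
    fn post_result(&mut self, request_id: String, result: Result<String, String>) {
        self.posted.push((request_id, result));
    }
}

/// The loop that `run` conceptually performs: fetch, call the handler, report.
fn run_loop<F>(api: &mut FakeRuntimeApi, handler: F)
where
    F: Fn(&str) -> Result<String, String>,
{
    while let Some(invocation) = api.next_invocation() {
        let result = handler(&invocation.payload);
        api.post_result(invocation.request_id, result);
    }
}

/// Feeds two fake invocations through the loop and returns what was reported.
fn demo() -> Vec<(String, Result<String, String>)> {
    let mut api = FakeRuntimeApi {
        pending: VecDeque::from(vec![
            Invocation { request_id: "req-1".to_string(), payload: "hello".to_string() },
            Invocation { request_id: "req-2".to_string(), payload: String::new() },
        ]),
        posted: Vec::new(),
    };

    // The handler only sees the payload; the loop owns all the plumbing.
    run_loop(&mut api, |payload| {
        if payload.is_empty() {
            Err("empty payload".to_string())
        } else {
            Ok(format!("Command {}.", payload))
        }
    });

    api.posted
}

fn main() {
    for (request_id, result) in demo() {
        println!("{request_id}: {result:?}");
    }
}
```

The point of the model is that the handler never deals with transport: it receives an event and returns a result, and everything else belongs to the runtime.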

Request & Response

#[derive(Deserialize)]
struct Request {
    command: String,
}

#[derive(Serialize)]
struct Response {
    req_id: String,
    msg: String,
}

As we are building a directly invoked Lambda, we can define our request and response as structs. Deriving Deserialize on the Request struct and Serialize on the Response struct lets the runtime automatically convert them from and to JSON payloads.

Handler

async fn function_handler(event: LambdaEvent<Request>) -> Result<Response, Error> {
    let command = event.payload.command;

    let resp = Response {
        req_id: event.context.request_id,
        msg: format!("Command {}.", command),
    };

    Ok(resp)
}

If we look at the handler's signature, we notice that the input to our handler is a LambdaEvent<Request> struct.

Finding the definition of this in the lambda_runtime crate tells us that LambdaEvent<T> also contains the Lambda context, where we can access AWS-specific metadata about the request.

/// Incoming Lambda request containing the event payload and context.
#[derive(Clone, Debug)]
pub struct LambdaEvent<T> {
    /// Event payload.
    pub payload: T,
    /// Invocation context.
    pub context: Context,
}
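Since the handler is just a function from an event to a result, its logic can be exercised without Lambda by building an event by hand. The sketch below mirrors the struct above with local stand-ins so that it compiles without any crates. The Context here is a simplified, hypothetical version that only carries request_id (the real lambda_runtime::Context has more fields), and handle is a synchronous copy of the generated handler body that uses String as its error type.

```rust
/// Simplified, hypothetical stand-in for lambda_runtime::Context.
/// The real type carries more metadata than the request id.
#[derive(Clone, Debug, Default)]
struct Context {
    request_id: String,
}

/// Local mirror of LambdaEvent<T>, so this compiles without the crate.
#[derive(Clone, Debug)]
struct LambdaEvent<T> {
    payload: T,
    context: Context,
}

/// Same shape as the generated Request, minus the serde derive.
struct Request {
    command: String,
}

/// Same shape as the generated Response, minus the serde derive.
#[derive(Debug, PartialEq)]
struct Response {
    req_id: String,
    msg: String,
}

/// Synchronous copy of the generated handler's body.
fn handle(event: LambdaEvent<Request>) -> Result<Response, String> {
    // Destructure once: the payload is our own type, the context comes from Lambda.
    let LambdaEvent { payload, context } = event;

    Ok(Response {
        req_id: context.request_id,
        msg: format!("Command {}.", payload.command),
    })
}

fn main() {
    let event = LambdaEvent {
        payload: Request { command: "Blazingly Fast!".to_string() },
        context: Context { request_id: "local-test".to_string() },
    };

    let resp = handle(event).expect("handler should succeed");
    println!("{} -> {}", resp.req_id, resp.msg);
}
```

Keeping the handler's logic independent of how the event arrived is what makes this kind of local check possible.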

Build

Thanks to cargo-lambda, we no longer need to build our application in Docker.

Run, Build, and Deploy Rust functions on AWS Lambda natively from your computer, no containers or VMs required. You can read more about this game-changing project in the cargo-lambda documentation.

To build our lambda we can run:

cargo lambda build

This creates the target/lambda directory, which is where our compiled binaries end up. We should now have a binary that is capable of running on Lambda.

But now we want to get it deployed to Lambda using Infrastructure as Code.

Deploy

The rust.aws-cdk-lambda package works great for deploying Rust Lambda functions through CDK, and it supports cargo lambda by default.

Let's install it using:

npm install -D rust.aws-cdk-lambda

Now we can define our lambda function in our base CDK stack.

import * as cdk from "aws-cdk-lib";
import { Construct } from "constructs";
import { RustFunction } from "rust.aws-cdk-lambda";

export class RustCdkDemoStack extends cdk.Stack {
  constructor(scope: Construct, id: string, props?: cdk.StackProps) {
    super(scope, id, props);

    new RustFunction(this, "RustLambda", {
      directory: "./",
      bin: "rust_cdk_demo",
    });
  }
}

We can now run cdk deploy and we should see the following output:

🍺 Building Rust code with `cargo lambda`. This may take a few minutes...

🎯 Cross-compile `rust_cdk_demo`: 66.607778s

✨ Synthesis time: 74.19s

...

✅ RustCdkDemoStack

✨ Deployment time: 47.72s

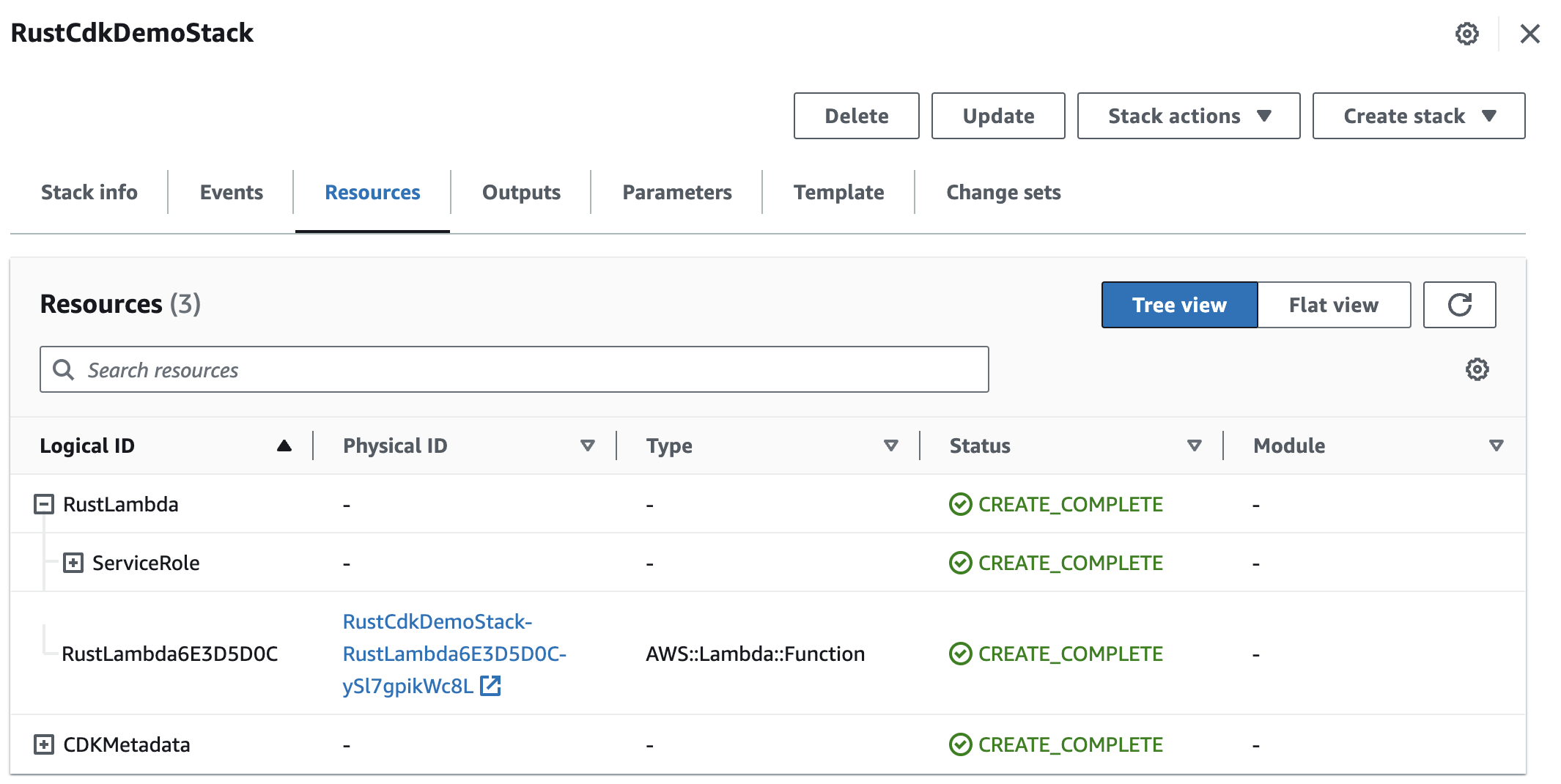

If we check the console we can see that our stack has now deployed.

Invocation

Let's navigate to our Lambda and test it with our payload.

Input:

{ "command": "Blazingly Fast!" }

Output:

{
  "req_id": "5703227f-8b95-48ca-8787-05725461c7d0",
  "msg": "Command Blazingly Fast!"
}

Success!

Duration: 1.31 ms Billed Duration: 24 ms Memory Size: 128 MB Max Memory Used: 15 MB Init Duration: 21.93 ms

A 22 ms cold start too!
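The billed duration in that report line is worth a second look. The numbers are consistent with the init phase being billed together with the handler duration and the total being rounded up to the next whole millisecond: 1.31 ms + 21.93 ms = 23.24 ms, which rounds up to 24 ms. The sketch below just reproduces that arithmetic. The billing rule is an assumption inferred from this one report line, and billed_ms is a helper made up for this illustration.

```rust
/// Billed milliseconds, ASSUMING the init phase is billed together with the
/// handler duration and the total is rounded up to the next whole millisecond.
fn billed_ms(duration_ms: f64, init_ms: f64) -> u64 {
    (duration_ms + init_ms).ceil() as u64
}

fn main() {
    // Values taken from the report line above (cold start).
    let cold = billed_ms(1.31, 21.93);

    // The same handler duration with no init phase (warm invocation).
    let warm = billed_ms(1.31, 0.0);

    println!("cold start billed: {cold} ms, warm billed: {warm} ms");
}
```

Under that assumption, a warm invocation with the same handler duration would be billed for 2 ms, which is why keeping both the init and handler durations small matters for cost.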

Hopefully this has encouraged you to give Rust Lambdas a go.